Information Model for LAE

History

At least four presentations: 2009 ~ 2011

Embedding Real Character into ARC continuum world

Interaction of Real Characters and ARC continuum world.

NWIP: Dec. 2012 (ISO/IEC 18521)

ARC concepts and reference model – Part 1: Reference Model

ARC concepts and reference model – Part 2: Physical Sensors

ARC concepts and reference model – Part 3: Real Character Representation

WD: 2013 ~ Present

- ARC renamed to MAR

- Revised to reflect the new MAR reference model

MAR-RM Ver. 5: July 2014

ISO/IEC WD 18521‑3, Version 3

Information technology — Computer graphics, image processing and environmental representation — Mixed and Augmented Reality Concepts and Reference Model – Part 3: Real Character Representation

MARRC-RM: A MAR Reference Model for Real Character Representation

ISO/IEC WD 18521-3 was removed.

In Aug. 2014, it was renamed “Live Actor and Entity Representation in MAR”.

Current Status

Information technology — Computer graphics, image processing and environmental representation — Live Actor and Entity Representation in Mixed and Augmented Reality

We are writing a WD for “Live Actor and Entity Representation in MAR”.

Scope

- Definition of live actor and entity

- Representation of live actor and entity

- Representation of properties of live actor and entity

- Sensing of live actor and entity in a real world

- Integration of live actor and entity into a 3D virtual scene

- Interaction between live actor/entity and objects in a 3D virtual scene

- Transmission of information among live actors/entities in MAR worlds

Normative References

- ISO/IEC 11072:1992, Information technology -- Computer graphics -- Computer Graphics Reference Model

- ISO/IEC 19775-1.2:2008, Information technology -- Computer graphics and image processing -- Extensible 3D (X3D)

- ISO/IEC 14772-1:1997, Information technology -- Computer graphics and image processing -- The Virtual Reality Modelling Language (VRML) -- Part 1: Functional specification and UTF-8 encoding

- ISO/IEC CD 18039, Information technology -- Computer graphics and image processing -- Mixed and Augmented Reality Reference Model, August 2015

- ISO/IEC 200x, Information technology -- Computer graphics and image processing -- Physical Sensor Representation in Mixed and Augmented Reality, August 2015

Terms, Definitions and Symbols

- Physical scene: An image or video captured by a “real world capturer” in the real world

- Physical data: Data captured by a “real world capturer” or a “pure sensor” in the real world, classified as LAE-physical data or nonLAE-physical data

- Static object: An object that cannot be translated, rotated or scaled in the real world or a virtual world

- Dynamic object: An object that can be translated, rotated and scaled in the real world or a virtual world

- Augmented object: An object to be augmented

- Live actor and entity: A representation of a living physical user or actor in MAR content or an MAR system

- Motion volume: A volume within which a live actor and entity can move in the real world or in a virtual world
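The object definitions above can be made concrete as a small data model. The following is a minimal Python sketch, not part of the standard: the names `Mobility`, `SceneObject`, `MotionVolume` and `LiveActorEntity` are hypothetical, and the motion volume is assumed to be an axis-aligned box, which the definition does not require.

```python
# Hypothetical sketch of the object/motion-volume definitions; all names are illustrative.
from dataclasses import dataclass
from enum import Enum

Vec3 = tuple[float, float, float]


class Mobility(Enum):
    STATIC = "static"    # cannot be translated, rotated or scaled
    DYNAMIC = "dynamic"  # can be translated, rotated and scaled


@dataclass
class SceneObject:
    name: str
    mobility: Mobility
    position: Vec3 = (0.0, 0.0, 0.0)

    def translate(self, dx: float, dy: float, dz: float) -> None:
        # A static object must keep its pose, so reject any translation.
        if self.mobility is Mobility.STATIC:
            raise ValueError(f"{self.name} is static and cannot be translated")
        x, y, z = self.position
        self.position = (x + dx, y + dy, z + dz)


@dataclass(frozen=True)
class MotionVolume:
    # Assumption: an axis-aligned box; real systems may use other shapes.
    min_corner: Vec3
    max_corner: Vec3

    def contains(self, p: Vec3) -> bool:
        return all(lo <= v <= hi for lo, v, hi in zip(self.min_corner, p, self.max_corner))


@dataclass
class LiveActorEntity:
    lae_id: str
    position: Vec3
    motion_volume: MotionVolume

    def move_to(self, target: Vec3) -> bool:
        # Accept the new position only if it stays inside the motion volume.
        if not self.motion_volume.contains(target):
            return False
        self.position = target
        return True
```

Rejecting out-of-volume moves is one possible policy; clamping the position to the volume boundary would be an equally reasonable choice.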

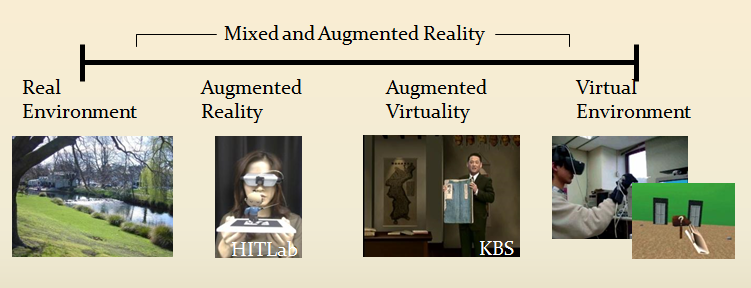

Concepts - overview

- MAR reference model (MAR-RM): ISO/IEC CD 18039

- Covers the definitions, objectives, scope, embedding, interaction and functions of a system for representing live actors and entities. In this standard, a live actor and entity is defined as a representation of a physical living actor or object in MAR content or an MAR system.

- An MAR world consists of a 3D virtual world and directly sensed and computer-generated information, including any combination of aural, visual, tactile, olfactory and gustatory information.

- Example: an MAR world constructed by integrating a 3D virtual world with live actors captured by a general camera and/or depth camera sensors.

- Here, the 3D virtual world depicts a realistic educational space in a library. Live actors, acting as learners as well as teachers, participate in this virtual educational world, and their participation is represented within it.

- The application integrates 3D videoconferencing, realistic communication features, presentation/application sharing, and 3D model display within a mixed environment.

- Once live actors and entities in the real world are integrated into a 3D virtual world, their locations, movements and interactions should be represented precisely in that world.

- In MAR applications, the live actors and entities to be embedded in a 3D virtual world must be defined, and their information (such as location, actions, and sensing data from the equipment they hold) must be transferable between MAR applications, and between a 3D virtual world and the real world.
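The requirement that LAE information be transferable between MAR applications implies some shared message format. The sketch below assumes JSON as that format; the `LAEInfo` fields and the `encode`/`decode` helpers are hypothetical and are not defined by the standard.

```python
# Hypothetical sketch: JSON is an assumed wire format, not one mandated by the standard.
import json
from dataclasses import asdict, dataclass, field


@dataclass
class LAEInfo:
    lae_id: str
    location: list[float]  # x, y, z in scene coordinates (assumed units: metres)
    action: str            # label of the most recent recognized action
    # Readings from equipment the actor holds, keyed by a hypothetical sensor name.
    device_data: dict[str, list[float]] = field(default_factory=dict)


def encode(info: LAEInfo) -> str:
    """Serialize LAE information for transfer to another MAR application."""
    return json.dumps(asdict(info), sort_keys=True)


def decode(message: str) -> LAEInfo:
    """Rebuild LAE information received from another MAR application."""
    return LAEInfo(**json.loads(message))
```

Because both sides share the same field names, a receiving application can round-trip a message without extra schema handling; a production system would add versioning and validation.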

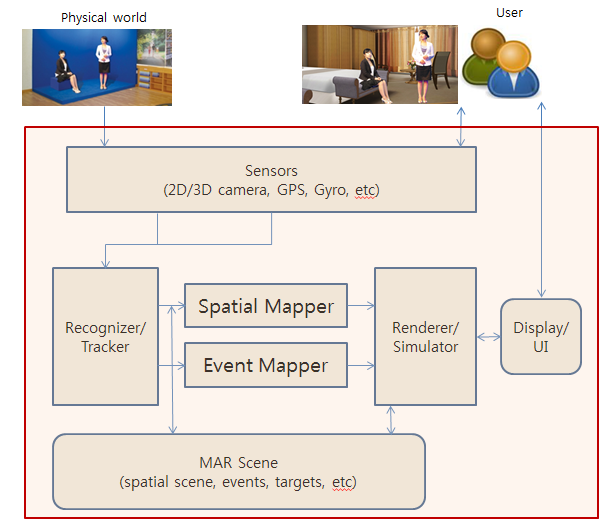

Concepts - components

- Sensing of live actors and entities in the real world by input devices such as (depth) cameras

- Sensing of information for interaction by input sensors

- Recognition and tracking of live actors and entities in the real world

- Recognition and tracking of events made by live actors and entities in the real world

- Recognition and tracking of events captured by the sensors themselves in the real world

- Representation of physical properties of live actor and entity in a 3D virtual world

- Spatial Control of live actor and entity into a 3D virtual world

- Event Interface between live actor/entity and a 3D virtual world

- Composite Rendering of live actor/entity into a 3D virtual world

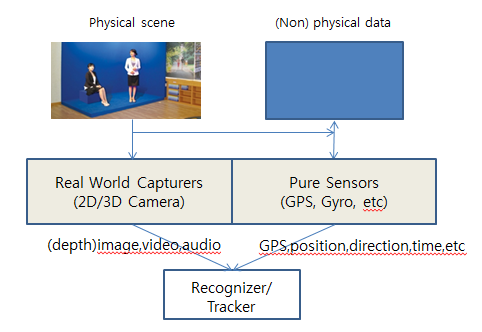

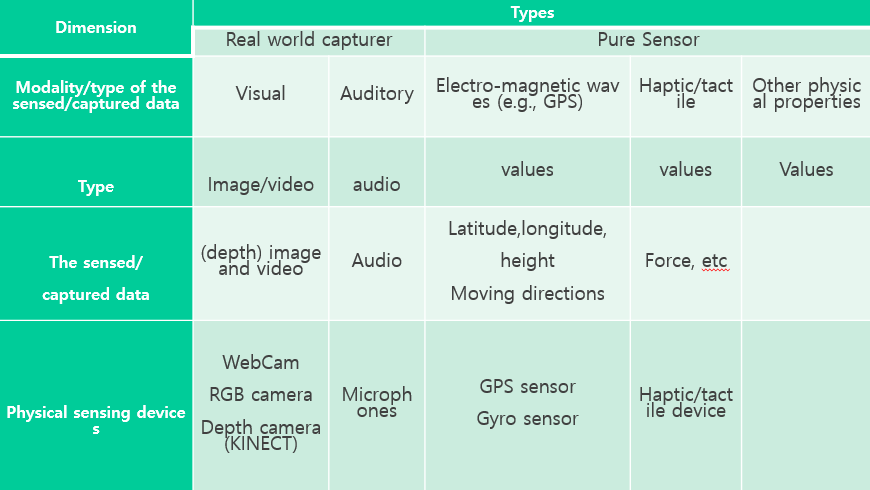

Sensors for Capturing Live Actors and Entities

Live actors and entities in the real world can be captured by hardware and (optionally) software sensors that are able to measure any kind of physical property. The resulting sensor data are classified as LAE-physical or nonLAE-physical data and can be captured by a “real world capturer” or a “pure sensor”.

Input: Real world signals

Output: Sensor data related to representation of live actor and entity
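The distinctions above (real world capturer vs. pure sensor, LAE-physical vs. nonLAE-physical data) could be carried on every sensor sample so that later stages receive only the LAE-related output. This is a sketch under that assumption; `Source`, `DataKind`, `SensorData` and `lae_output` are hypothetical names.

```python
# Hypothetical sketch: tag each sample with its source and data kind, then filter.
from dataclasses import dataclass
from enum import Enum
from typing import Any


class Source(Enum):
    REAL_WORLD_CAPTURER = "real_world_capturer"  # e.g. a camera or depth camera
    PURE_SENSOR = "pure_sensor"                  # e.g. GPS or an accelerometer


class DataKind(Enum):
    LAE_PHYSICAL = "lae_physical"          # describes a live actor or entity
    NON_LAE_PHYSICAL = "non_lae_physical"  # describes anything else


@dataclass(frozen=True)
class SensorData:
    sensor_id: str
    source: Source
    kind: DataKind
    timestamp: float
    payload: Any


def lae_output(samples: list[SensorData]) -> list[SensorData]:
    """Keep only the sensor data related to representing live actors and entities."""
    return [s for s in samples if s.kind is DataKind.LAE_PHYSICAL]
```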

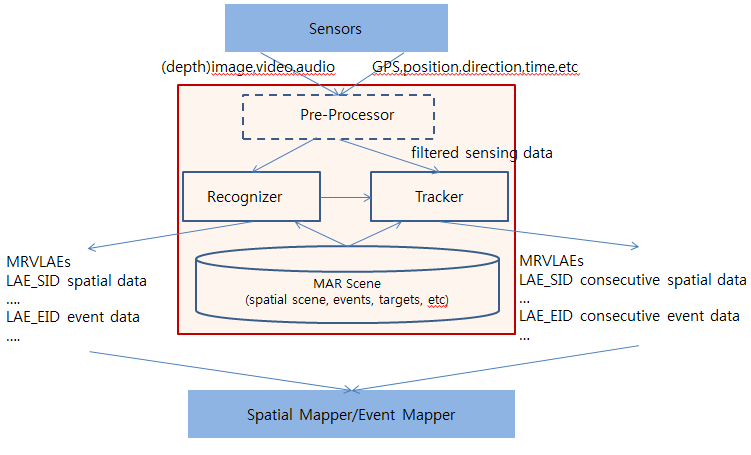

Recognizer/Tracker of Live Actors and Entities

- Pre-processor

- Recognizer

- Tracker

- Pre-processing:

- Sensing data may be pre-processed to improve its quality for the spatial mapping and event mapping of live actors and entities into a MAR world.

- Pre-processing functions applied to captured data may include filtering, color conversion, background removal, chroma keying, and depth information extraction, using computer vision techniques. Pre-processing provides cleaner input for representing live actors and entities.
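Chroma keying, one of the pre-processing functions listed above, can be illustrated with a deliberately simple version: pixels whose RGB color lies within a fixed Euclidean distance of the key color are marked transparent. This sketch works on plain nested lists; the function name, default key color and tolerance are assumptions, and practical keyers usually work in other color spaces and produce soft mattes.

```python
# Hypothetical sketch of hard-threshold chroma keying in RGB space.
Pixel = tuple[int, int, int]
RGBA = tuple[int, int, int, int]


def chroma_key(image: list[list[Pixel]], key: Pixel = (0, 255, 0),
               tolerance: float = 100.0) -> list[list[RGBA]]:
    """Return an RGBA copy of `image` with key-colored background pixels made transparent."""
    tol_sq = tolerance * tolerance
    result = []
    for row in image:
        out_row = []
        for r, g, b in row:
            # Squared Euclidean distance to the key color in RGB space.
            dist_sq = (r - key[0]) ** 2 + (g - key[1]) ** 2 + (b - key[2]) ** 2
            alpha = 0 if dist_sq <= tol_sq else 255  # 0 = background, 255 = actor
            out_row.append((r, g, b, alpha))
        result.append(out_row)
    return result
```

Comparing squared distances avoids a square root per pixel without changing which pixels pass the threshold.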

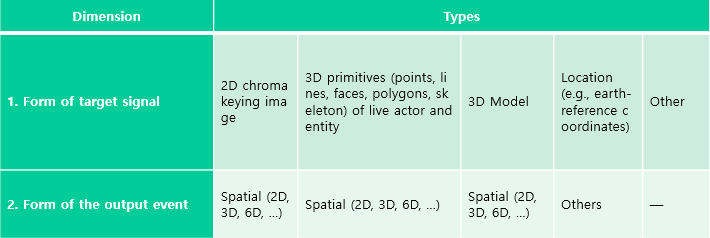

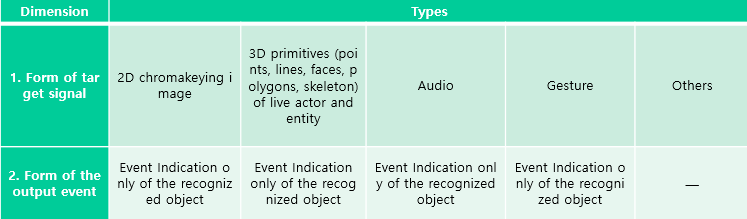

- The Recognizer identifies live actors and/or entities and their events in pre-processed sensing data.

- The Tracker follows consecutive spatial and event data of live actors and/or entities over time. Both components can use information stored in the MAR scene.

- Tracker:

- Input: sensing data related to representation of live actor and entity.

- Output: Instantaneous values of the characteristics of the recognized live actor and entity.

- Recognizer:

- Input: Sensing data related to representation of live actor and entity in a MAR world

- Output: At least one event acknowledging the recognition
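The Recognizer and Tracker interfaces above can be sketched as follows, assuming skeleton joints from a depth camera as the sensing data. The `HAND_RAISED` rule, the joint names and the velocity estimate are hypothetical examples of an event and of "instantaneous values of the characteristics", not definitions from the standard.

```python
# Hypothetical sketch: joint names, the HAND_RAISED rule and the velocity estimate are assumptions.
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Event:
    lae_id: str
    name: str
    timestamp: float


def recognize(lae_id: str, joints: dict[str, Vec3], timestamp: float) -> list[Event]:
    """Recognizer: emit an event for each rule matched in one frame of joint data."""
    events = []
    head, hand = joints.get("head"), joints.get("right_hand")
    # Example rule: the right hand is above the head (y axis points up).
    if head is not None and hand is not None and hand[1] > head[1]:
        events.append(Event(lae_id, "HAND_RAISED", timestamp))
    return events


class Tracker:
    """Tracker: turn consecutive positions into instantaneous position and velocity."""

    def __init__(self) -> None:
        self._last: dict[str, tuple[float, Vec3]] = {}

    def update(self, lae_id: str, position: Vec3, timestamp: float) -> dict[str, Vec3]:
        velocity: Vec3 = (0.0, 0.0, 0.0)
        previous = self._last.get(lae_id)
        if previous is not None and timestamp > previous[0]:
            dt = timestamp - previous[0]
            # Finite-difference velocity between the last two samples.
            velocity = tuple((p - q) / dt for p, q in zip(position, previous[1]))
        self._last[lae_id] = (timestamp, position)
        return {"position": position, "velocity": velocity}
```

The Recognizer returns an empty list when nothing is recognized, so a caller can forward whatever it returns without special cases.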

- Event representation:

- Events of live actors and entities can arise from their own actions as well as from the outputs of sensing interface devices they hold.

- Two types: sensing data of the live actors and entities themselves, and sensing data from devices held by them.

- The defined events are stored in an event database (Event DB) in the MAR scene.

- Sensing outputs captured by a “real world capturer”: gestures (hands, fingers, head, body), facial expressions, speech and voice

- Sensing outputs captured by a “pure sensor”: AR markers, GPS (Global Positioning System), Wii Remote motion data, and other sensing data from a smart device, such as its three-axis accelerometer and magnetometer (digital compass)
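The two event types and the Event DB described above could be modeled as below. This is an in-memory sketch; `EventSource`, `LAEEvent` and `EventDB` are hypothetical names, and an Event DB stored in the MAR scene may look quite different.

```python
# Hypothetical in-memory sketch of the Event DB; names and layout are assumptions.
from dataclasses import dataclass
from enum import Enum
from typing import Any, Optional


class EventSource(Enum):
    SELF = "self"      # the actor's own action: gesture, facial expression, voice
    DEVICE = "device"  # output of a held device: AR marker, GPS, accelerometer


@dataclass(frozen=True)
class LAEEvent:
    lae_id: str
    source: EventSource
    name: str
    value: Any = None


class EventDB:
    """Minimal event database keyed by live actor/entity identifier."""

    def __init__(self) -> None:
        self._events: dict[str, list[LAEEvent]] = {}

    def store(self, event: LAEEvent) -> None:
        self._events.setdefault(event.lae_id, []).append(event)

    def query(self, lae_id: str, source: Optional[EventSource] = None) -> list[LAEEvent]:
        # Optionally filter by event type (self-generated vs. device-generated).
        events = self._events.get(lae_id, [])
        return [e for e in events if source is None or e.source is source]
```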

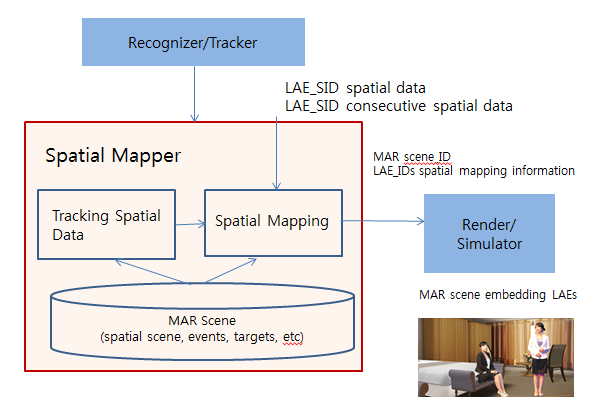

Spatial Mapper of Live Actors and Entities

- The Spatial Mapper provides spatial relationship information (position, orientation, scale and unit) between the physical space and the space of the MAR scene, applying the transformations needed to calibrate live actors and entities.

- The spatial reference frames and spatial metrics used by a given sensor need to be mapped into those of the MAR scene so that the sensed live actors and entities can be correctly placed, oriented and sized. The spatial relationship between a particular sensor system and an MAR space is provided by the MAR experience creator and is maintained by the Spatial Mapper.

- Input: Sensor identifier (SID) and sensed spatial information of live actor and entity.

- Output: Calibrated spatial information of live actor and entity for the given MAR scene.
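A Spatial Mapper can keep one calibration per sensor identifier (SID) and apply it to every sensed position. The sketch assumes the calibration consists of a uniform scale (covering unit conversion), a rotation about the vertical axis and a translation; a full implementation would use a general rigid transform and would also map orientation, which is omitted here. All names are hypothetical.

```python
# Hypothetical sketch: scale + yaw + translation is an assumed, simplified calibration model.
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Calibration:
    scale: float       # sensor units -> scene units (e.g. 0.001 for mm -> m)
    yaw: float         # rotation about the scene's vertical (y) axis, in radians
    translation: Vec3  # position of the sensor origin in scene coordinates


class SpatialMapper:
    def __init__(self) -> None:
        self._calibrations: dict[str, Calibration] = {}

    def register(self, sid: str, calibration: Calibration) -> None:
        """Store the calibration supplied by the MAR experience creator for a sensor."""
        self._calibrations[sid] = calibration

    def map_position(self, sid: str, sensed: Vec3) -> Vec3:
        """Convert a sensed position into calibrated MAR scene coordinates."""
        c = self._calibrations[sid]  # raises KeyError for an unregistered sensor
        x, y, z = (c.scale * v for v in sensed)
        cos_a, sin_a = math.cos(c.yaw), math.sin(c.yaw)
        # Rotate about the y axis, then translate into scene space.
        xr = cos_a * x + sin_a * z
        zr = -sin_a * x + cos_a * z
        tx, ty, tz = c.translation
        return (xr + tx, y + ty, zr + tz)
```

Scaling first keeps the translation expressed in scene units, so the experience creator can enter it directly from the scene layout.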

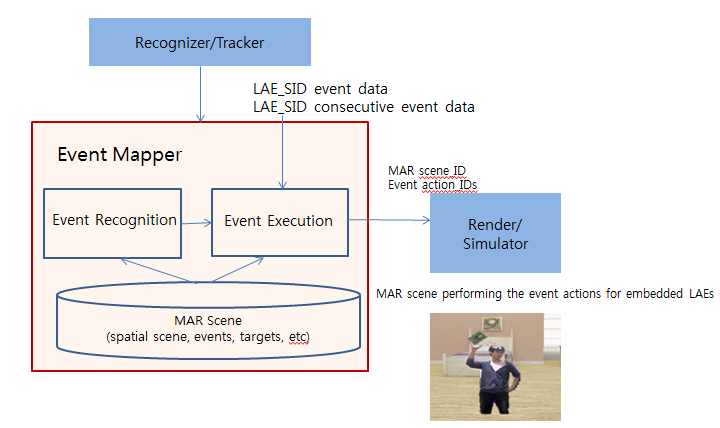

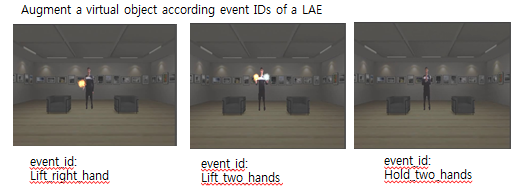

Event Mapper of Live Actors and Entities

- The Event Mapper associates a MAR event of a live actor and entity, obtained from the Recognizer or the Tracker, with the condition specified by the MAR Content Creator in the MAR scene.

- Input: Event identifier and event information.

- Output: Translated event identifier for the given MAR scene.
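The Event Mapper's translation can be sketched as a rule table written by the content creator: each rule pairs an incoming event identifier and a condition on the event information with the identifier the MAR scene understands. The rule format and the example identifiers are hypothetical.

```python
# Hypothetical sketch of condition-based event translation; the rule format is an assumption.
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Optional

Condition = Callable[[dict[str, Any]], bool]


@dataclass(frozen=True)
class MappingRule:
    event_id: str         # identifier produced by the Recognizer or Tracker
    condition: Condition  # condition specified by the MAR content creator
    scene_event_id: str   # identifier understood by the MAR scene


class EventMapper:
    def __init__(self, rules: Iterable[MappingRule]) -> None:
        self._rules = list(rules)

    def translate(self, event_id: str, info: dict[str, Any]) -> Optional[str]:
        """Return the scene event identifier of the first matching rule, or None."""
        for rule in self._rules:
            if rule.event_id == event_id and rule.condition(info):
                return rule.scene_event_id
        return None
```

Rules are checked in order, so a creator can place more specific conditions before general ones.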

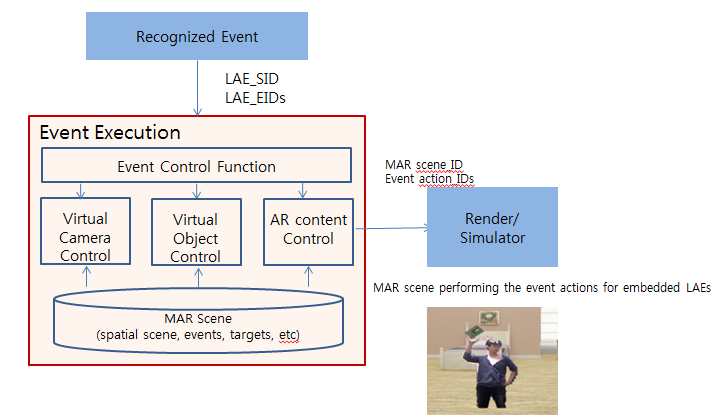

- A detected event is passed to the event class (EventClass) in an execution module. If the detected event is one of the events defined in the MAR system, the event executor (EventExecutor) applies the corresponding event to the object in the 3D virtual space; that is, the object is manipulated according to the corresponding event function.

- Event execution: event control function/(MAR object) control
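The dispatch described above, where a detected event reaches `EventClass` and `EventExecutor` applies the matching event function to a virtual object, can be sketched as a lookup table. Only the two class names come from the text; their fields and methods, and `VirtualObject`, are assumptions for illustration.

```python
# Hypothetical sketch: only the names EventClass and EventExecutor come from the text.
from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class VirtualObject:
    name: str
    position: list[float] = field(default_factory=lambda: [0.0, 0.0, 0.0])
    visible: bool = True


@dataclass(frozen=True)
class EventClass:
    event_id: str  # translated event identifier from the Event Mapper
    target: str    # name of the virtual object the event applies to


class EventExecutor:
    def __init__(self, objects: Iterable[VirtualObject]) -> None:
        self._objects = {obj.name: obj for obj in objects}
        self._functions: dict[str, Callable[[VirtualObject], None]] = {}

    def define(self, event_id: str, function: Callable[[VirtualObject], None]) -> None:
        """Register the event function the MAR system defines for an event."""
        self._functions[event_id] = function

    def execute(self, event: EventClass) -> bool:
        """Apply the event function to its target; return False if the event or target is undefined."""
        function = self._functions.get(event.event_id)
        target = self._objects.get(event.target)
        if function is None or target is None:
            return False
        function(target)
        return True
```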

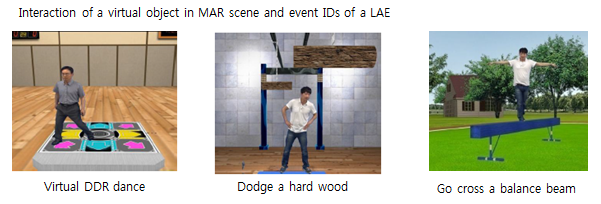

- Event execution examples

Conformance

- Provide sensing of live actors and entities in a MAR world

- Provide a MAR scene for tracking and recognizing live actors and entities in a MAR world

- Provide Tracker of live actors and entities

- Provide Recognizer of live actors and entities

- Provide Spatial Mapper of live actors and entities into a MAR world

- Provide Event Mapper between live actors and entities and a MAR world